27.4.3.1. Application Layer Frame Support

27.4.3.1.1. Baseline

27.4.3.1.2. General Concepts

Frame support was introduced with Suricata 7.0. Up until 6.0.x, Suricata's architecture and state of parsers meant that the network traffic available to the detection engine was just a stream of data, without detail about higher level parsers.

Note

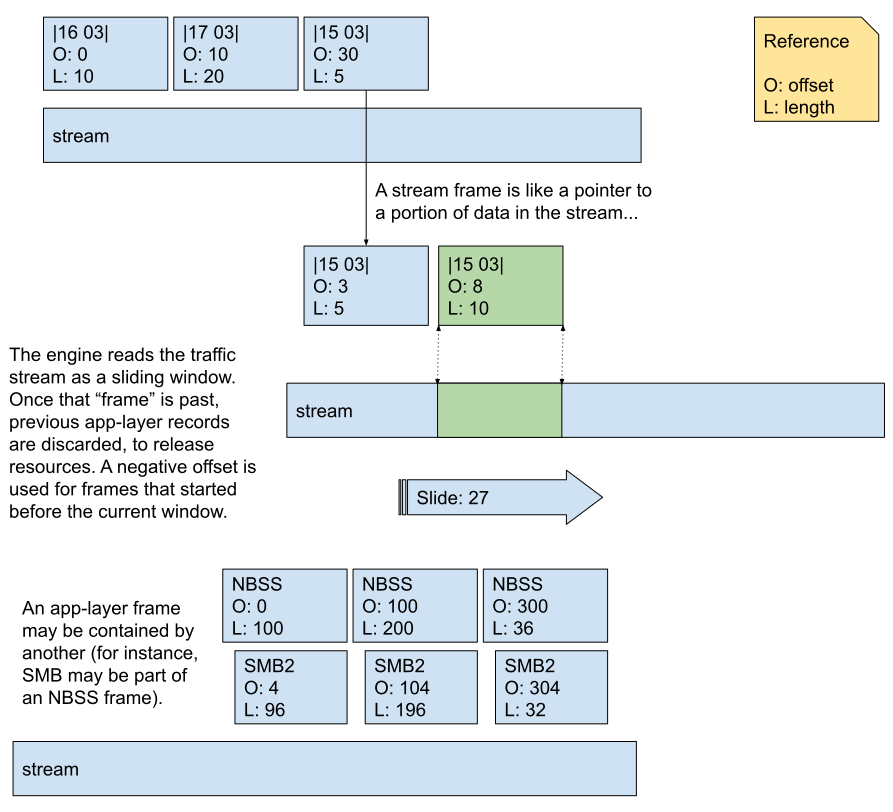

For Suricata, Frame is a generic term that can represent any unit of network data we are interested in, which could be composed of one or several records of other, lower level protocol(s). Frames work as "stream annotations", allowing Suricata to tell the detection engine what type of record exists at a specific offset in the stream.

The normal pipeline of detection in Suricata implied that:

Certain rules could be quite costly performance-wise. This happened because the same stream could be inspected several times for different rules, since for certain signatures the detection is done while Suricata is still inspecting a lower level stream, not the application layer protocol (e.g., TCP traffic rather than SMB traffic);

Rules could be difficult and tedious to write (and read), requiring writers to go into byte-level detail to express matching on specific payload patterns.

What the Frame support offers is the ability to "point" to a specific portion of stream and identify what type of traffic Suricata is looking at. Then, as the engine reassembles the stream, one can have "read access" to that portion of the stream, aggregating concepts like what type of application layer protocol that is, and differentiating between header, data or even protocol versions (SMB1, SMB2...).

The goal of the stream Frame is to expose application layer protocol PDUs and other such arbitrary elements to the detection engine directly, instead of relying on Transactions. The main purpose is to bring TCP data processing times down by specialising/filtering down traffic detection.
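The "stream annotation" idea can be sketched in a few lines of standalone Rust. This is only an illustration under stated assumptions: `FrameKind`, `FrameAnnotation`, `frame_bytes` and `frames_containing` are hypothetical names, not Suricata types or functions, and the toy record layout (4-byte header followed by data) is made up.

```rust
// Hypothetical types for illustration only; these are not Suricata's internal structures.

/// Kinds of records a made-up parser might annotate.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum FrameKind {
    Pdu,
    Hdr,
    Data,
}

/// A frame is only an annotation: a type plus an offset and a length in the stream.
#[derive(Debug, Clone, Copy)]
struct FrameAnnotation {
    kind: FrameKind,
    offset: usize,
    len: usize,
}

/// Return the bytes a frame points at, or None if the stream does not hold them (yet).
fn frame_bytes<'a>(stream: &'a [u8], frame: &FrameAnnotation) -> Option<&'a [u8]> {
    let end = frame.offset.checked_add(frame.len)?;
    stream.get(frame.offset..end)
}

/// Count the frames of one kind whose bytes contain `needle`,
/// instead of searching the whole stream.
fn frames_containing(
    stream: &[u8], frames: &[FrameAnnotation], kind: FrameKind, needle: &[u8],
) -> usize {
    if needle.is_empty() {
        return 0;
    }
    frames
        .iter()
        .filter(|f| f.kind == kind)
        .filter_map(|f| frame_bytes(stream, f))
        .filter(|bytes| bytes.windows(needle.len()).any(|w| w == needle))
        .count()
}

fn main() {
    // Toy layout: two records, each a 4-byte header followed by 11 bytes of data.
    let stream = b"HDR1payload-oneHDR2payload-two";
    let frames = [
        FrameAnnotation { kind: FrameKind::Pdu, offset: 0, len: 15 },
        FrameAnnotation { kind: FrameKind::Hdr, offset: 0, len: 4 },
        FrameAnnotation { kind: FrameKind::Data, offset: 4, len: 11 },
        FrameAnnotation { kind: FrameKind::Pdu, offset: 15, len: 15 },
        FrameAnnotation { kind: FrameKind::Hdr, offset: 15, len: 4 },
        FrameAnnotation { kind: FrameKind::Data, offset: 19, len: 11 },
    ];
    let hits = frames_containing(stream, &frames, FrameKind::Data, b"two");
    println!("data frames matching: {}", hits);
}
```

The point of the sketch is that a match restricted to `Data` frames never looks at header bytes, which is the kind of filtering the frame support aims for.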

27.4.3.1.3. Adding Frame Support to a Parser

The application layer parser exposes frames it supports to the detect engine, by tagging them as they're parsed. The rest works automatically.

In order to allow the engine to identify frames for records of a given application layer parser, thought must be given to which frames make sense for the specific protocol you are handling. Some parsers may have clear header and data fields that form their protocol data unit (PDU). For others, the only distinction might be between request and response, whereas for others it may make sense to have specific types of data. This is better understood by looking at the different types of frame keywords, which vary on a per-protocol basis.

It is also important to follow naming conventions when defining Frame Types. While a protocol may have strong naming standards for certain structures, do compare those with what Suricata already has registered:

hdr: used for the record header portion

data: is used for the record data portion

pdu: unless documented otherwise, means the whole record, comprising hdr and data

request: a message from a client to a server

response: a message from a server to a client

27.4.3.1.3.1. Basic steps

Once the frame types that make sense for a given protocol are defined, the basic steps for adding them are:

create an enum with the frame types;

identify the parsing function(s) where application layer records are parsed;

identify the correct moment to register the frames;

use the Frame API calls directly or build upon them and use your functions to register the frames;

register the relevant frame callbacks when registering the parser.

Once these are done, you can enable frame eve-output to confirm that your frames are being properly registered. It is important to note that some hard-coded limits could influence what you see in the logs (max size of log output; type of logging for the payload, cf. https://redmine.openinfosecfoundation.org/issues/4988).

If all the steps are successfully followed, you should be able to write a rule using the frame keyword and the frame types you have registered with the application layer parser.

Using the SMB parser as example, before frame support, a rule would look like:

alert tcp ... flow:to_server; content:"|ff|SMB"; content:"some smb 1 issue";

With frame support, one is able to do:

alert smb ... flow:to_server; frame:smb1.data; content:"some smb 1 issue";

27.4.3.1.3.2. Implementation Examples & API Callbacks

Though the steps are the same, there are a few differences when implementing frame support in Rust or in C. The following sections elaborate on that, as well as on the process itself. Note that the code snippets omit portions of code that are not relevant to this document.

27.4.3.1.3.2.1. Rust

This section shows how Frame support is added in Rust, using examples from the SIP parser, and the telnet parser.

Define the frame types. The frame types are defined as an enum. In Rust, make sure to derive from the AppLayerFrameType:

    #[derive(AppLayerFrameType)]
    pub enum SIPFrameType {
        Pdu,
        RequestLine,
        ResponseLine,
        RequestHeaders,
        ResponseHeaders,
        RequestBody,
        ResponseBody,
    }

Frame registering. Some understanding of the parser will be needed in order to find where the frames should be registered. It makes sense that it will happen when the input stream is being parsed into records. See when some pdu and request frames are created for SIP:

    fn parse_request(&mut self, flow: *const core::Flow, stream_slice: StreamSlice) -> bool {
        let input = stream_slice.as_slice();
        let _pdu = Frame::new(
            flow,
            &stream_slice,
            input,
            input.len() as i64,
            SIPFrameType::Pdu as u8,
        );
        SCLogDebug!("ts: pdu {:?}", _pdu);
        match sip_parse_request(input) {
            Ok((_, request)) => {
                sip_frames_ts(flow, &stream_slice, &request);
                self.build_tx_request(input, request);
                return true;
            }

Note

when to create PDU frames

The standard approach we follow for frame registration is that a pdu frame will always be created, regardless of parser status (in practice, before the parser is called). The other frames are then created only if and when the parser succeeds.

Use the Frame API or build upon it as needed. These are the frame registration functions called from the parse_request snippet above:

    fn sip_frames_ts(flow: *const core::Flow, stream_slice: &StreamSlice, r: &Request) {
        let oi = stream_slice.as_slice();
        let _f = Frame::new(
            flow,
            stream_slice,
            oi,
            r.request_line_len as i64,
            SIPFrameType::RequestLine as u8,
        );
        SCLogDebug!("ts: request_line {:?}", _f);
        let hi = &oi[r.request_line_len as usize..];
        let _f = Frame::new(
            flow,
            stream_slice,
            hi,
            r.headers_len as i64,
            SIPFrameType::RequestHeaders as u8,
        );
        SCLogDebug!("ts: request_headers {:?}", _f);
        if r.body_len > 0 {
            let bi = &oi[r.body_offset as usize..];
            let _f = Frame::new(
                flow,
                stream_slice,
                bi,
                r.body_len as i64,
                SIPFrameType::RequestBody as u8,
            );
            SCLogDebug!("ts: request_body {:?}", _f);
        }
    }
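The registration order described in the note on PDU frames (pdu frame first, remaining frames only when the parser succeeds) can be modelled without any Suricata dependency. The following is a minimal sketch under assumptions: `ToyFrameType`, `FrameLog`, `parse_record` and `handle_input` are hypothetical stand-ins, and the record format (one length byte followed by that many data bytes) is invented for the example.

```rust
// Hypothetical stand-ins: `FrameLog` and `parse_record` are not part of Suricata.

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum ToyFrameType {
    Pdu,
    Hdr,
    Data,
}

/// Collects (type, offset, len) tuples in place of the real Frame API.
#[derive(Default)]
struct FrameLog {
    frames: Vec<(ToyFrameType, usize, usize)>,
}

impl FrameLog {
    fn register(&mut self, ty: ToyFrameType, offset: usize, len: usize) {
        self.frames.push((ty, offset, len));
    }
}

/// Toy format: one length byte (the header), then that many data bytes.
/// Returns the data length on success.
fn parse_record(input: &[u8]) -> Result<usize, &'static str> {
    let (&len, rest) = input.split_first().ok_or("empty input")?;
    if rest.len() < len as usize {
        return Err("truncated record");
    }
    Ok(len as usize)
}

fn handle_input(log: &mut FrameLog, input: &[u8]) -> bool {
    // 1. The pdu frame is registered before the parser runs, whatever the outcome.
    log.register(ToyFrameType::Pdu, 0, input.len());

    // 2. The finer-grained frames are registered only if parsing succeeds.
    match parse_record(input) {
        Ok(data_len) => {
            log.register(ToyFrameType::Hdr, 0, 1);
            log.register(ToyFrameType::Data, 1, data_len);
            true
        }
        Err(_) => false,
    }
}

fn main() {
    let mut log = FrameLog::default();
    let ok = handle_input(&mut log, &[3, b'a', b'b', b'c']);
    println!("parsed: {}, frames: {:?}", ok, log.frames);

    // Truncated record: only the pdu frame is registered.
    let mut log = FrameLog::default();
    let ok = handle_input(&mut log, &[9, b'a']);
    println!("parsed: {}, frames: {:?}", ok, log.frames);
}
```

Registering the pdu frame unconditionally means there is still something to inspect and log when the parser rejects the input.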

Register relevant frame callbacks. As these are inferred from the #[derive(AppLayerFrameType)] statement, all that is needed is:

    get_frame_id_by_name: Some(SIPFrameType::ffi_id_from_name),
    get_frame_name_by_id: Some(SIPFrameType::ffi_name_from_id),
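To make the role of these two callbacks more concrete, here is a hand-written sketch of a name/id lookup pair. It is not the code generated by the derive macro: `ToyFrameType`, `FRAME_NAMES`, `frame_id_from_name` and `frame_name_from_id` are hypothetical, the name strings are illustrative, and the functions use plain Rust types rather than the FFI signatures the parser registration expects.

```rust
// Hand-written illustration; in a Rust parser the mapping comes from the derive.
// The enum and the name strings below are hypothetical.

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
#[repr(u8)]
enum ToyFrameType {
    Pdu = 0,
    Hdr = 1,
    Data = 2,
}

/// Single table shared by both lookups, so names and ids cannot drift apart.
static FRAME_NAMES: [(&str, ToyFrameType); 3] = [
    ("pdu", ToyFrameType::Pdu),
    ("hdr", ToyFrameType::Hdr),
    ("data", ToyFrameType::Data),
];

/// Name to id. Unknown names return None without logging anything.
fn frame_id_from_name(name: &str) -> Option<u8> {
    FRAME_NAMES
        .iter()
        .find(|(n, _)| *n == name)
        .map(|(_, t)| *t as u8)
}

/// Id to name. Unknown ids return None without logging anything.
fn frame_name_from_id(id: u8) -> Option<&'static str> {
    FRAME_NAMES
        .iter()
        .find(|(_, t)| *t as u8 == id)
        .map(|(n, _)| *n)
}

fn main() {
    println!("hdr -> {:?}", frame_id_from_name("hdr"));
    println!("2 -> {:?}", frame_name_from_id(2));
    println!("bogus -> {:?}", frame_id_from_name("bogus"));
}
```

Keeping both directions driven by one table is a design choice of this sketch; it mirrors the way the C parser further below uses a single enum-to-string map.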

Note

on frame_len

For protocols which search for an end-of-frame character, like telnet, indicate unknown length by passing -1. Once the length is known, it must be updated. For protocols where the length is a field in the record (e.g. SIP), the frame is set to match said length, even if that is bigger than the current input.

The telnet parser has examples of using the Frame API directly for registering telnet frames, and also illustrates how that is done when length is not yet known:

    fn parse_request(
        &mut self, flow: *const Flow, stream_slice: &StreamSlice, input: &[u8],
    ) -> AppLayerResult {
        let mut start = input;
        while !start.is_empty() {
            if self.request_frame.is_none() {
                self.request_frame = Frame::new(
                    flow,
                    stream_slice,
                    start,
                    -1_i64,
                    TelnetFrameType::Pdu as u8,
                );
            }
            if self.request_specific_frame.is_none() {
                if let Ok((_, is_ctl)) = parser::peek_message_is_ctl(start) {
                    let f = if is_ctl {
                        Frame::new(
                            flow,
                            stream_slice,
                            start,
                            -1_i64,
                            TelnetFrameType::Ctl as u8,
                        )
                    } else {
                        Frame::new(
                            flow,
                            stream_slice,
                            start,
                            -1_i64,
                            TelnetFrameType::Data as u8,

We then update length later on (note especially lines 3 and 10):

     1  match parser::parse_message(start) {
     2      Ok((rem, request)) => {
     3          let consumed = start.len() - rem.len();
     4          if rem.len() == start.len() {
     5              panic!("lockup");
     6          }
     7          start = rem;
     8
     9          if let Some(frame) = &self.request_frame {
    10              frame.set_len(flow, consumed as i64);
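The create-then-update pattern for frames of unknown length can be reproduced in a small standalone model. This is a sketch under assumptions, not the telnet parser: `ToyFrame` and `frame_lines` are hypothetical, a newline is assumed to be the end-of-frame character, and the input arrives in arbitrary chunks.

```rust
// Hypothetical model: `ToyFrame` is not Suricata's `Frame`.

#[derive(Debug, PartialEq, Eq)]
struct ToyFrame {
    /// Offset of the first byte of the frame in the stream.
    offset: usize,
    /// -1 means "length not known yet".
    len: i64,
}

impl ToyFrame {
    fn new(offset: usize, len: i64) -> Self {
        ToyFrame { offset, len }
    }

    fn set_len(&mut self, len: i64) {
        self.len = len;
    }
}

/// Open a frame at the start of each line with len -1 and set the real
/// length once the terminating '\n' has been seen, possibly in a later chunk.
fn frame_lines(chunks: &[&[u8]]) -> Vec<ToyFrame> {
    let mut done = Vec::new();
    let mut open: Option<ToyFrame> = None;
    let mut stream_pos = 0usize;

    for chunk in chunks {
        for &b in chunk.iter() {
            if open.is_none() {
                // Length is unknown when the frame starts.
                open = Some(ToyFrame::new(stream_pos, -1));
            }
            stream_pos += 1;
            if b == b'\n' {
                if let Some(mut f) = open.take() {
                    // The terminator is counted as part of the frame.
                    f.set_len((stream_pos - f.offset) as i64);
                    done.push(f);
                }
            }
        }
    }
    // A frame whose terminator has not arrived keeps len == -1.
    if let Some(f) = open {
        done.push(f);
    }
    done
}

fn main() {
    let chunks: [&[u8]; 2] = [b"ls -", b"la\nwho"];
    for f in frame_lines(&chunks) {
        println!("{:?}", f);
    }
}
```

In this run the first frame starts in one chunk and is completed in the next, while the trailing `who` stays open with length -1 until more data arrives.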

The parameters of the Frame API calls represent:

flow: dedicated data type, carries specific flow-related data

stream_slice: dedicated data type, carries stream data, shown further below

frame_start: a pointer to the start of the frame buffer in the stream (cur_i in the SMB code snippet)

frame_len: what we expect the frame length to be (the engine may need to wait until it has enough data; see how request frames are registered in the telnet snippet)

frame_type: the type of frame being registered (defined in an enum, as shown further above)

StreamSlice contains the input data to the parser, alongside other Stream-related data important in the parsing context. Its definition is found in applayer.rs:

    pub struct StreamSlice {
        input: *const u8,
        input_len: u32,
        /// STREAM_* flags
        flags: u8,
        offset: u64,
    }
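One plausible way to turn a `frame_start` sub-slice into a stream position is to measure its distance from the start of the slice input and add the slice's own offset. The sketch below models that with safe types. `ToySlice` and `absolute_frame_offset` are hypothetical, and treating `offset` as the absolute stream position of the first input byte is an assumption of this example, not a statement about the engine's internals.

```rust
// Hypothetical, safe re-modelling of the idea; the real StreamSlice holds a raw pointer.

struct ToySlice<'a> {
    /// Data handed to the parser for this call.
    input: &'a [u8],
    /// Assumed meaning: absolute stream offset of input[0].
    offset: u64,
}

/// Absolute stream offset of `frame_start`, which is expected to be a
/// sub-slice of `slice.input`. Returns None if it does not fit inside it.
fn absolute_frame_offset(slice: &ToySlice, frame_start: &[u8]) -> Option<u64> {
    let base = slice.input.as_ptr() as usize;
    let start = frame_start.as_ptr() as usize;
    // Distance in bytes from the beginning of the input to the frame start.
    let rel = start.checked_sub(base)?;
    let end = rel.checked_add(frame_start.len())?;
    if end > slice.input.len() {
        return None;
    }
    Some(slice.offset + rel as u64)
}

fn main() {
    let data = b"abcdefgh";
    // Pretend 100 bytes of the stream were already consumed before this slice.
    let slice = ToySlice { input: &data[..], offset: 100 };
    let frame_start = &slice.input[3..];
    println!("frame starts at {:?}", absolute_frame_offset(&slice, frame_start));
}
```

Passing a sub-slice rather than a numeric offset lets the parser keep working with the same `&[u8]` values it already slices while parsing.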

27.4.3.1.3.2.2. C code

Implementing Frame support in C involves a bit more manual work, as one cannot make use of the Rust derives. Code snippets from the HTTP parser:

Defining the frame types with the enum means:

    enum HttpFrameTypes {
        HTTP_FRAME_REQUEST,
        HTTP_FRAME_RESPONSE,
    };

    SCEnumCharMap http_frame_table[] = {
        {
            "request",
            HTTP_FRAME_REQUEST,
        },
        {
            "response",
            HTTP_FRAME_RESPONSE,
        },
        { NULL, -1 },
    };

The HTTP parser uses the Frame registration functions from the C API (app-layer-frames.c) directly for registering request Frames. Here we also don't know the length yet. The 0 indicates flow direction: toserver, and 1 would be used for toclient:

    Frame *frame = AppLayerFrameNewByAbsoluteOffset(
            hstate->f, hstate->slice, consumed, -1, 0, HTTP_FRAME_REQUEST);
    if (frame) {
        SCLogDebug("frame %p/%" PRIi64, frame, frame->id);
        hstate->request_frame_id = frame->id;
        AppLayerFrameSetTxId(frame, HtpGetActiveRequestTxID(hstate));
    }

Updating frame->len later:

    if (hstate->request_frame_id > 0) {
        Frame *frame = AppLayerFrameGetById(hstate->f, 0, hstate->request_frame_id);
        if (frame) {
            const uint64_t request_size = abs_right_edge - hstate->last_request_data_stamp;
            SCLogDebug("HTTP request complete: data offset %" PRIu64 ", request_size %" PRIu64,
                    hstate->last_request_data_stamp, request_size);
            SCLogDebug("frame %p/%" PRIi64 " setting len to %" PRIu64, frame, frame->id,
                    request_size);
            frame->len = (int64_t)request_size;

Register relevant callbacks (note that the actual functions will also have to be written, for C):

    AppLayerParserRegisterGetFrameFuncs(
            IPPROTO_TCP, ALPROTO_HTTP1, HTTPGetFrameIdByName, HTTPGetFrameNameById);

Note

The GetFrameIdByName functions can be "probed", so they should not generate any output, as that could be misleading (for instance, Suricata generating a log message stating that a valid frame type is unknown).

27.4.3.1.4. Visual context

input and input_len are used to calculate the proper offset, for storing the frame. The stream buffer slides forward, so frame offsets/frames have to be updated. The relative offset (rel_offset) reflects that:

    Start:
    [ stream ]
      [ frame ...........]
     rel_offset: 2
     len: 19

    Slide:
              [ stream ]
    [ frame ....          .]
     rel_offset: -10
     len: 19

    Slide:
                    [ stream ]
    [ frame ...........    ]
     rel_offset: -16
     len: 19
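The numbers in the diagram can be reproduced with simple arithmetic, treating the relative offset as the frame's absolute position minus the absolute position of the first byte still held in the stream buffer. This is a sketch: `rel_offset` and `bytes_still_buffered` are hypothetical helpers, and the absolute positions (frame at 2, buffer start at 0, 12 and 18) are assumptions chosen to match the diagram.

```rust
/// Relative offset of a frame with respect to the current start of the stream buffer.
/// Negative values mean the frame began in data that has already slid out.
fn rel_offset(frame_abs_offset: u64, stream_base_offset: u64) -> i64 {
    frame_abs_offset as i64 - stream_base_offset as i64
}

/// Number of frame bytes that lie at or after the current start of the buffer.
fn bytes_still_buffered(frame_abs_offset: u64, frame_len: u64, stream_base_offset: u64) -> u64 {
    let frame_end = frame_abs_offset + frame_len;
    let visible_start = frame_abs_offset.max(stream_base_offset);
    frame_end.saturating_sub(visible_start)
}

fn main() {
    // Assumed absolute positions, chosen to match the diagram above.
    let frame_abs = 2u64;
    let frame_len = 19u64;
    for &base in [0u64, 12, 18].iter() {
        println!(
            "stream base {:>2}: rel_offset {:>3}, len {}, frame bytes still buffered {}",
            base,
            rel_offset(frame_abs, base),
            frame_len,
            bytes_still_buffered(frame_abs, frame_len, base)
        );
    }
}
```

The frame length stays at 19 throughout; only the relative offset changes as the buffer slides forward.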

The way the engine handles stream frames can be illustrated as follows: